Google, OpenAI, Tesla, ByteDance: Who Just Took the Lead in the AI Wars?

We’re in the middle of a platform war, and the battlefield is your daily workflow. From Google’s Gemini expanding across every device to Claude, Codex, and Manus redefining what agents do, the era of passive prompts is over. These aren’t chatbots. They’re co-workers, co-directors, and co-pilots.

Let’s unpack the upgrades, the plays, and what they mean for developers, creators, and builders.

🚨 Google Just Went Beast Mode at I/O 2025

Google didn’t launch features. It detonated an entire product reset. AI everywhere: hardware, software, search, assistants, agents, glasses, video. Nothing was left untouched.

Why it matters: This wasn’t an iteration. It was a platform pivot into a fully AI-native ecosystem.

Here's the breakdown.

🔥 Gemini Ultra: $249/month for AI with a Brain

The new Gemini Ultra plan ($249.99/month, U.S. only) is less a subscription and more a compute buffet. You get:

- Veo 3 video generation with sound effects and real dialogue

- DeepThink multi-path reasoning mode

- Flow, Google’s AI filmmaking studio

- NotebookLM with the highest usage limits

- 30TB cloud storage, YouTube Premium, and more

First-time users get 50% off for three months.

Why it matters: A single Veo 3 render can use more GPU than most indie devs burn in a week. This is Google's moonshot bet on serious creators, developers, and enterprise users going all-in on AI production.

🧠 DeepThink Mode: Multiverse Reasoning for Gemini 2.5 Pro

Standard Gemini 2.5 Pro already tops several LMArena categories. DeepThink goes further: before responding, it considers multiple solution paths in parallel, crushes math and coding benchmarks, and rivals OpenAI’s o-series reasoning models.

Status: In limited testing via the Gemini API. Open release TBD.

Why it matters: DeepThink moves Gemini from reactive chatbot to deliberative AI. Think AlphaZero for language.
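Google hasn’t published how DeepThink works internally. As a rough intuition for “multiple solution paths,” here is a minimal sketch of a well-known related technique, self-consistency voting: sample several independent reasoning paths and keep the majority answer. `fake_solver` is a hypothetical stand-in for a model call, and this is an analogy, not Google’s implementation.

```python
from collections import Counter
from typing import Callable


def multi_path_answer(solve: Callable[[int], str], n_paths: int = 5) -> str:
    """Run `solve` once per seed (one 'reasoning path' each) and return the majority answer.

    Self-consistency voting, used here only as an analogy for multi-path
    reasoning; it is NOT DeepThink's actual algorithm.
    """
    answers = [solve(seed) for seed in range(n_paths)]
    # most_common(1) returns [(answer, count)] for the most frequent answer.
    answer, _count = Counter(answers).most_common(1)[0]
    return answer


def fake_solver(seed: int) -> str:
    # Hypothetical stand-in for one sampled chain of thought:
    # every third path makes an arithmetic slip and answers "41".
    return "41" if seed % 3 == 0 else "42"


if __name__ == "__main__":
    print(multi_path_answer(fake_solver))  # prints 42
```

Individual paths can be wrong; aggregating several of them makes the final answer more robust, at the cost of roughly `n_paths` times more compute per query.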

🎬 Veo 3 + Flow: AI Cinema Enters the Chat

Veo 3 is Google’s new flagship video model. It generates short HD clips with synced audio: dialogue, ambient noise, even footsteps. Think “AI film scenes,” not just animations.

- Flow: Multimodal workspace for chaining scenes, remixing references, and extending clips.

- Imagen 4: Hyper-detailed stills with fabric, water, and fur accuracy. 10x speed boost coming.

Why it matters: Video generation just crossed the cinematic uncanny valley.

🤖 DeepAgent: Build GPT-Style AI, Deploy Anywhere

DeepAgent, from Abacus.AI rather than Google, lets devs build fully custom GPT/Gemini-style bots with personality, brand them, and embed them directly into their apps or sites.

- Connect to Google Drive, SharePoint, web docs, or live sources

- Automate workflows (Slack, Jira, GitHub)

- Build dashboards, write docs, act as support, or a personal assistant

Why it matters: It’s not a plugin. It’s your white-labeled GPT on your stack.

📞 Gemini Live: Real-Time AI on Your Phone

Gemini Live now supports screen sharing and camera input on Android and iOS. Ask questions in real time, flip your camera, and get context-aware responses that interact with Maps, Calendar, Gmail, and Drive.

Why it matters: Gemini can now read your world, remember it, and speak like you.

🔍 AI Mode in Search: Google Skips the Blue Links

Search now includes a dedicated AI Mode tab with conversational answers, citations, and, soon, live charts.

- AI Mode is slated to book tickets, pick seats, and handle checkout in real time.

- AI Overviews already reach 1.5B users. AI Mode pushes that all the way to a full agent.

Why it matters: This is Google trying to out-AI its search business before OpenAI does.

📹 Starline Becomes Beam: 3D Video Calls + Voice Translation

Project Starline is now Google Beam, a 3D video-calling platform for holographic-style telepresence. Separately, Google Meet gets live speech translation that preserves the speaker’s voice and tone.

Beta: English-Spanish translation for AI Pro and Ultra subscribers.

Why it matters: It’s not Zoom. It’s teleportation with real-time translation.

👨‍💻 Dev Tools: AI-Coded Apps in Seconds

- Stitch: Text-to-UI generator (HTML + CSS)

- Gemini Flash: Ultra-fast LLM optimized for speed/cost

- Google AI Studio: Now supports Flash + Imagen endpoints

- Jules: Code agent that handles PRs and tickets

- Android Studio: Adds build journeys + crash insights

Why it matters: Gemini isn’t just a chatbot; it’s a dev team.

⌚ Pixel, Play, and Devices

- Wear OS 6: Unified tile fonts + dynamic theming

- Google Play: Topic browse pages, subscription bundles, and the option to halt rollouts of releases with fatal bugs

- Gemma 3n: New 4B model for on-device multimodal AI

- SynthID Detector: Portal for detecting invisible SynthID watermarks in media

Why it matters: Generative AI is now embedded in hardware, app stores, and content moderation.

🧪 Gemini Diffusion + Android XR Glasses

- Gemini Diffusion: Experimental text-diffusion model fast enough to generate working app prototypes in seconds

- Android XR: Real-world AR with full Gemini integration; ask it questions, get 3D overlays

Why it matters: Google’s LLMs now create apps and overlay reality.

🦾 Real Robots, Real Jobs: The Humanoids Are Here

Just a few years ago, humanoid robots were futuristic mascots: flashy, fragile, and mostly theatrical. Today? They’re lifting patients, patrolling buildings, working in shipyards, and taking on outdoor labor.

Why it matters: We're witnessing the transition from robot demos to deployment. Here’s how it's happening across sectors, from hospitals to heavy industry.

🏥 Foxconn + NVIDIA Launch Nurabot, the AI Nursing Assistant

At Computex, Foxconn, NVIDIA, and Kawasaki dropped Nurabot, a humanoid nurse built to work in hospitals, not labs. It can:

- Monitor vitals and alert caregivers in real time

- Help patients move, deliver meds, and offer companionship

- Navigate autonomously, understand language, and adapt over time

It’s already in trials at Taiwan’s Taichung Veterans General Hospital.

Why it matters: Nurses are burned out. Populations are aging. Nurabot isn't just a tool; it's a potential new member of the care team.

🛡️ Singapore Unboxes Its First Humanoid Security Robot

Certus just deployed its first full humanoid robot (from Agibot) as a research testbed. Use cases include:

- Building security

- Customer service

- Facilities management

It integrates with Certus’ orchestration platform, Mozart, and will be evaluated in real-world environments.

Why it matters: Singapore’s public and gradual rollout reflects a growing global trend where humanoids are introduced not to replace people but to enhance teams.

🚢 $27M for Persona AI’s Shipyard-Ready Humanoids

Persona AI, founded by ex-NASA and Figure AI talent, just raised $27 million to deploy humanoids into harsh industrial sites.

- Use cases: Shipbuilding, manufacturing, rugged outdoor work

- Backed by Hyundai for live shipyard deployment within 18 months

- Offered via robotics-as-a-service, not expensive one-off sales

Why it matters: This isn’t speculative funding. It’s deployment-driven capital for robots that replace labor in places where humans burn out.

🔩 RoboForce Titan: Outdoor Robot With Precision and Power

Titan is a rugged, modular robot designed for hostile environments.

- Handles mining, solar fields, manufacturing, and even potential off-world use

- Precision: 1 mm

- Load: 40 kg

- Shift: 8 hours

- Actions: Pick, place, press, twist, connect

Total raised: $15M | Founder: Leo Ma | Location: Silicon Valley

Why it matters: Titan ditches the "generalist" fantasy and focuses on the five core movements that most labor tasks boil down to.

🦿 Carnegie Mellon’s Falcon: Forceful, Coordinated Humanoid Motion

Falcon is a dual-agent control framework that enables humanoids to apply force with their arms while walking.

- Tested on Unitree G1 and Booster T1

- Applies up to 100 N of force while maintaining balance

- Doubles arm accuracy compared with older systems

Limitations: Struggles with torque and body-wide force distribution.

Why it matters: Most robots fall over when they try to "do things." Falcon brings us closer to humanoids that can truly work like humans.

📈 The Bottom Line

Real humanoid labor is no longer sci-fi. These aren't toys or tokens; they're tools:

- Nurabot eases hospital burnout

- Certus prototypes real-world public interaction

- Persona deploys to shipyards

- RoboForce scales up dirty, dangerous tasks

- CMU trains robots for real-world coordination under force

This is a new industrial class of robotics, built for the grind, not the showroom.

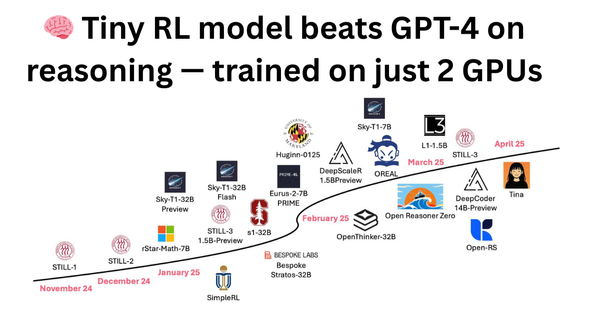

🚀 AI Just Rebooted Itself

Open-source beats the giants. NVIDIA flips the compute economy. Apple admits Siri is broken. YouTube targets your emotions. Flowith launches agents that finish full apps solo. Every layer of the stack just shifted. Here’s the chaos, condensed.

Why it matters: We’re past the phase of flashy demos. This is AI going full-stack, decentralized, emotional, embodied, and autonomous, all at once.

🧠 Intellect 2: The First Decentralized LLM That Works

Prime Intellect dropped Intellect 2, a 32B-parameter reasoning model trained across a global swarm.

- No central datacenter: rollouts, training, and weight updates are spread across permissionless nodes

- PRIME-RL handles the split: rollout → training → SHARDCAST weight sync

- TOPLOC verifies untrusted workers’ rollouts with locality-sensitive hashing, so bad actors can be caught and slashed

- Asynchronous RL means no idle GPUs, constant compute

- Open-sourced: code, weights, full stack ready for contributors

Why it matters: It’s a blueprint for decentralized AI that actually scales and self-heals. Fully open. No mega-cloud required.
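The “no idle GPUs” point rests on asynchronous RL: inference nodes keep generating rollouts with the weights they already have while the trainer moves ahead, so some rollouts come from slightly older policy versions. Below is a minimal sketch of one common safeguard, assuming a simple staleness bound. The names `Rollout`, `select_batch`, and `max_staleness` are hypothetical, not PRIME-RL’s API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rollout:
    policy_version: int  # version of the weights that generated this sample
    reward: float


def select_batch(buffer: List[Rollout], trainer_version: int, max_staleness: int = 1) -> List[Rollout]:
    """Keep rollouts whose generating policy is at most `max_staleness` versions behind the trainer.

    Hypothetical helper: slightly off-policy samples are accepted so inference
    nodes never wait, but samples that are too stale are dropped.
    """
    return [r for r in buffer if trainer_version - r.policy_version <= max_staleness]


if __name__ == "__main__":
    # The trainer is at version 4; a slow node is still sampling with version 2.
    buffer = [Rollout(3, 1.0), Rollout(4, 0.0), Rollout(2, 1.0)]
    batch = select_batch(buffer, trainer_version=4, max_staleness=1)
    print([r.policy_version for r in batch])  # prints [3, 4]
```

Raising `max_staleness` keeps more nodes productive but trains on more off-policy data; where to set it is a trade-off that depends on the model and the network.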

🧩 Flowith’s Neo Agent: Build Full Projects Autonomously

Flowith’s Neo isn’t just a chatbot; it’s a 1,000-step autonomous agent that finishes entire projects.

- Visual canvas shows every action and plan

- Works 24/7, remembers everything, handles apps, research, sequences

- Sub-agent system = build AI teams under one command

- Users built: 3D tank games, simulators, finance dashboards, social pipelines

- No code. No babysitting. Mobile-ready.

Why it matters: Neo is the first real hint of AI as an autonomous workforce, not just a copilot.

💻 NVIDIA DGX Cloud Lepton + NVLink Fusion = Planet-Scale Compute

Jensen Huang didn’t just drop chips; he built an AI compute marketplace.

- Lepton: Stitches GPU capacity across CoreWeave, SoftBank, Lambda, and more

- You rent slots; it handles pooling, region routing, and scheduling

- NVLink Fusion: Lets partners plug their own chips (Qualcomm, Marvell, Arm, etc.) into NVIDIA’s NVLink fabric

Why it matters: NVIDIA is turning AI infrastructure into a utility grid that is modular, scalable, and frictionless.
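To make “pooling and region routing” concrete, here is a toy routing rule under stated assumptions: pick the cheapest offer in the requested region that has enough free GPUs. Provider names and prices are made up, and this is not DGX Cloud Lepton’s real scheduling logic or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Offer:
    provider: str              # hypothetical provider name
    region: str
    free_gpus: int
    price_per_gpu_hour: float  # illustrative, made-up price


def route(offers: List[Offer], region: str, gpus: int) -> Optional[Offer]:
    """Return the cheapest offer in `region` with at least `gpus` free GPUs, or None."""
    candidates = [o for o in offers if o.region == region and o.free_gpus >= gpus]
    return min(candidates, key=lambda o: o.price_per_gpu_hour, default=None)


if __name__ == "__main__":
    offers = [
        Offer("provider-a", "us-east", 64, 2.10),
        Offer("provider-b", "us-east", 8, 1.80),
        Offer("provider-c", "eu-west", 128, 1.50),
    ]
    best = route(offers, region="us-east", gpus=16)
    print(best.provider if best else "no capacity")  # prints provider-a
```

A real marketplace would also weigh latency, reservations, and preemption; the point here is only that the renter states *what* they need and the pool decides *where* it runs.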

📱 Apple Finally Rebuilding Siri with LLMs

Bloomberg’s Mark Gurman reports that Siri is getting replaced. Apple’s old rule-based bot is out. New plan:

- Rebuild Siri on a custom LLM stack under the Apple Intelligence banner

- Combine on-device inference + cloud for heavy lifting

- Target: Demo-ready by next iPhone cycle

Behind the scenes:

- GPU investment ramping up

- John Giannandrea finally greenlit the generative reboot

- Internal team calls legacy Siri “whack-a-mole hell.”

Why it matters: Apple knows it missed the wave. This is its comeback bet: a privacy-first AI that runs on-device where it can and hands heavier work to Apple’s own cloud.

🎯 YouTube’s PeakPoints: Emotion-Triggered Ads

YouTube is testing Gemini-powered mid-rolls that drop after emotional peaks.

- Analyzes frames + transcript

- Flags climax moments (proposal, plot twist, glitch)

- Delays the ad until after the emotional hit

Demo: Marriage proposal → cheers → fade out → ad

Why it matters: Emotionally timed ads = higher recall + CPMs. Creators win, brands win. Viewers? Jury’s still out.
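One simple way to picture “delay the ad until after the emotional hit”: given a per-second intensity score (assumed here to come from a model reading frames and transcript), find the peak and schedule the ad at the first moment intensity falls well below it. The scores, `drop_ratio`, and `ad_slot_after_peak` are hypothetical; this is not YouTube’s actual logic.

```python
from typing import List, Optional


def ad_slot_after_peak(intensity: List[float], drop_ratio: float = 0.5) -> Optional[int]:
    """Return the first index after the global peak where intensity falls
    below `drop_ratio` * peak, or None if the scene never settles."""
    if not intensity:
        return None
    # Index of the highest-intensity second (first one wins on ties).
    peak_idx = max(range(len(intensity)), key=intensity.__getitem__)
    threshold = intensity[peak_idx] * drop_ratio
    for i in range(peak_idx + 1, len(intensity)):
        if intensity[i] < threshold:
            return i  # the "fade out" after the climax
    return None


if __name__ == "__main__":
    # Hypothetical scores: build-up, proposal (peak), cheers, fade out.
    scores = [0.1, 0.3, 0.9, 0.8, 0.6, 0.3, 0.2]
    print(ad_slot_after_peak(scores))  # prints 5
```

Waiting for the drop rather than cutting in at the peak is the whole idea: the viewer gets the payoff first, then the ad.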

🌐 China’s AI Juggernaut: Real-Time Gen, Full Video Studios, and Multi-Agent Research Teams

Tencent, Alibaba, and ByteDance just redefined the edge of AI. Real-time image generation. End-to-end video editing. Vision-language models outperforming OpenAI. Autonomous agent systems that can run research pipelines solo.

Why it matters: Every assumption about where AI was weak (visuals, video, research automation) just got overturned. Here's the full breakdown.

🎨 Tencent’s Hunyuan Image 2.0: Real-Time, High-Res Image Gen

Tencent launched Hunyuan Image 2.0, its next-gen image model with near-instant generation.

- Sub-second response time for text, voice, or sketch prompts

- Real-time drawing board: update sketches and see color, lighting effects immediately

- Accepts sketch input, live voice, and freeform ideas for generative design

Why it matters: Designers can now iterate visually in real time. No more waiting. Just create.

🎥 Alibaba’s VACE: One-Click Video Studio in Your Browser

Alibaba's VACE (Video All-in-One Creation and Editing) is an open-source AI studio that does it all: generate, edit, animate, and transform clips in one run.

- Supports text-to-video, mask edits, object replacement, scene extension, and multi-ref blending

- Handles up to 720p resolution, with roadmap to scale higher

- Available on Hugging Face + ModelScope, runs locally with CLI or Gradio UI

Why it matters: Forget jumping between tools. VACE is Adobe Premiere + After Effects + Runway ML, in one open platform.

🧠 ByteDance Drops Seed 1.5 VL: A Multimodal Giant

Seed 1.5 VL is a vision-language model built to dominate general-purpose reasoning.

- 532M-parameter vision encoder + MoE LLM with 20B active parameters

- Tops 38 of 60 public benchmarks, beating OpenAI and Anthropic in multiple categories

- Uses dynamic resolution sampling for smarter video analysis

- Excels at image classification, object counting, document parsing, spatial reasoning, and OCR

Why it matters: Compact, efficient, and state-of-the-art, Seed 1.5 VL is multimodal AI built for real-world tasks, not just leaderboard flexing.

🕸️ ByteDance’s DeerFlow: Multi-Agent Research Automation Framework

DeerFlow is ByteDance’s open-source orchestration system for building modular, agent-powered research pipelines.

- Built with LangChain + LangGraph

- Agents handle task planning, search, code, data analysis, and reporting

- Visual graph editor + web UI for tracing workflows in real-time

- MIT licensed, supports local/cloud runs, and connects to TTS, code exec, and web APIs

Why it matters: This is AI research automation at scale, with transparency, modularity, and human-in-the-loop oversight baked in.

🧠 The AI Agent Wars Just Escalated

OpenAI turns ChatGPT into a full-stack engineer. China’s new image agents think. Claude’s next-gen upgrade quietly brews. Google redefines search, again. Everything is pointing toward one thing: autonomous, purpose-built AI agents that work.

Why it matters: It’s not just about chat anymore. Agents are learning, building, fixing, iterating, and replacing whole workflows.

🧰 OpenAI Codex: Your New Teammate Writes, Tests, and Commits Code

Codex just dropped in ChatGPT (Pro, Team, Enterprise) as a secure, isolated development agent.

- Runs in a sandboxed environment with no internet exposure

- Handles real tasks: feature building, bug fixing, test running, and repo Q&A

- codex-1 is a version of o3 optimized for software engineering, trained with reinforcement learning on real-world coding tasks

- Logs, results, and commits come with full traceability

- A smaller codex-mini (based on o4-mini) powers Codex CLI, optimized for low-latency terminal work

Benchmarks: 75% pass@1 on SuiteBench, well above o3-high (67%).

Why it matters: Codex doesn’t just assist. It acts. Real engineering work, handled quietly in the background while you focus on the bigger picture.

🎨 Manus AI: Not Just a Generator — A Visual Thinker

Manus (from Butterfly Effect AI) is a closed-beta autonomous image agent that behaves like a creative director.

- Multi-agent architecture: planning, layout, style matching, verification

- Uses design trends, brand libraries, spatial engines, not just prompts

- Applies color theory, picks real-world furniture (yes, even IKEA), maps layouts

- Built for: e-commerce, ad creative, interior architecture, product mockups

Why it matters: Manus isn’t here for pretty pictures; it solves visual problems. Purpose-driven output at production quality.

🧠 Claude’s Silent Evolution: “True Agents” Are Coming

Anthropic is reportedly prepping its next major Claude upgrade, possibly Claude 3.8 or Claude 4, codenamed Neptune.

- Internal leaks point to agentic behavior: reasoning, acting, and retrying autonomously

- Planned tools: real-time tool calling, multi-step planning, complex flow integration

- Transparency focus: developers may see step-by-step tool chains and revisions

- Upgrades are coming to both Sonnet and Opus variants

Why it matters: This could make Claude the most transparent and controllable agent on the market, the opposite of the “black box” chatbot.

🔍 Google AI Search: Gemini Overviews Go Full Assistant

Google is transforming Search into a memory-rich conversational agent.

- Over 1.5B users now see Gemini-powered AI Overviews

- New feature: AI Mode, a Gemini chat overlay inside Search

- Persistent memory, turn-by-turn refinement, inline source handling

- Competing directly with ChatGPT and Perplexity on native search behavior

Why it matters: Google’s future is less about ranking pages and more about becoming your default research assistant.

📈 Apple’s Safari Move Threatens Google’s Empire

Rumors are swirling that Apple may replace Google Search in Safari, either with its LLM or a partner like OpenAI.

- Safari dominates mobile traffic

- Even a partial switch could hit Google’s revenue and user base

- Google’s stock dipped the moment the rumor surfaced

Why it matters: Mobile search = money. If Apple flips that default, Google has a serious fight on its hands.

📊 AI Agent Roundup

🧩 AI Phrase of the Week: “Agentic Behavior”

Definition: The ability of an AI model to reason, plan, act, self-correct, and interact with tools or environments without being spoon-fed instructions.

Why it matters: We’re moving beyond single-shot replies. The smartest models now think in chains, call APIs, retry tasks, and explain their steps autonomously.
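A minimal sketch of the act, call-a-tool, self-correct, and explain-your-steps loop from the definition above. For brevity the plan is fixed; a real agent would have the model produce and revise it. `run_agent` and the flaky `search` tool are hypothetical, not any vendor’s agent API.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def run_agent(plan: List[Tuple[str, str]], tools: Dict[str, Tool], max_retries: int = 2) -> List[str]:
    """Execute (tool_name, argument) steps, retrying failures and logging every attempt."""
    trace: List[str] = []
    for tool_name, arg in plan:
        for attempt in range(1, max_retries + 2):  # first try + max_retries retries
            try:
                result = tools[tool_name](arg)
            except Exception as exc:
                # Self-correction in its simplest form: record the failure and retry.
                trace.append(f"{tool_name} attempt {attempt} failed: {exc}")
                continue
            trace.append(f"{tool_name} -> {result}")
            break
        else:
            # Runs only if every attempt failed (the loop never hit `break`).
            trace.append(f"{tool_name}: gave up after {max_retries + 1} attempts")
    return trace


if __name__ == "__main__":
    calls = {"search": 0}

    def flaky_search(query: str) -> str:
        # Hypothetical tool that times out on its first call only.
        calls["search"] += 1
        if calls["search"] == 1:
            raise TimeoutError("timeout")
        return f"3 results for {query!r}"

    for line in run_agent([("search", "humanoid robots")], {"search": flaky_search}):
        print(line)
```

The returned trace is the “explain their steps” part: every attempt, failure, and result is visible instead of hidden behind a single reply.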

Bottom Line

We’re not watching AI evolve. We’re watching software disappear into agents that act. No UIs, no commands, just results. Whether you're writing code, generating campaigns, managing workflows, or exploring data, these new models don’t just assist; they own tasks.

So the real question now is: Which agent will work beside you next week, and which ones will replace a whole department?

Catch you in the next issue.

If you are into content creation, here are two free tools for you to check out:

🎥 Taledy has a suite of tools for creating videos, transcribing, creating shorts, and much more. Check it out!

🤖 Vidyne provides a hands-off way to manage your YouTube channel by automatically creating videos and uploading them to your channel. Try it out!

The Taledy AI Team