Is LoRA More Powerful than Full Weight Tuning for Reasoning?

Tiny Model, Massive Reasoning: RL + LoRA Hits New Benchmark Highs

Prompting can only take you so far. Reinforcement learning (RL) gets expensive fast. And when it comes to training models that reason, most people assume you need billions of parameters and a heap of GPUs.

Tina flips that assumption on its head.

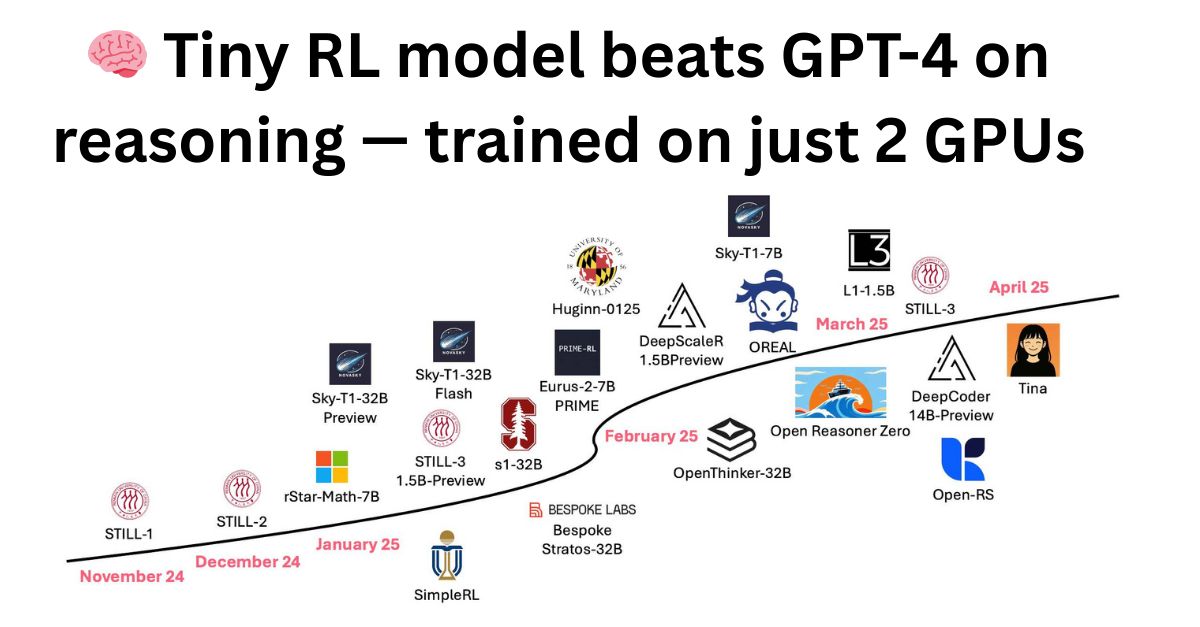

Meet Tina: a family of tiny reasoning models fine-tuned via low-rank adaptation (LoRA) and reinforcement learning, at the cost of 2 cups of coffee. Tina isn’t just adorable in size (1.5B base params). It's competitive with state-of-the-art (SOTA) reasoning models that use full parameters, and it often performs better. All for less than $10 of compute.

Let’s unpack what makes Tina tick.

Can Tiny Models Reason?

That’s the central question behind Tina. The team started with a simple hypothesis: maybe you don’t need giant models to get strong reasoning performance. Maybe you just need the right training signal and a cost-efficient way to inject it.

They took a 1.5B distilled DeepSeek-Qwen base model, already known for decent reasoning out of the box, and applied LoRA-based RL to nudge it toward better reasoning chains. The choice of this model enables researchers to accurately measure the impact on results after applying RL. The choice of this model makes experimentation faster and cheaper.

The result? A 20%+ performance gain and 43.3% Pass@1 on AIME24 at just $9 total cost, including evaluation.

The term pass@1 refers to a performance metric used to evaluate models, particularly large language models (LLMs), on tasks like code generation or problem-solving. It measures the probability that the model's first attempt at solving a problem is correct. In other words, if a model is given a set of problems and it provides a single solution for each, the pass@1 score represents the percentage of problems for which that first solution is correct. In the context of 43.33% pass@1 accuracy on AIME24, this means that the model correctly solved approximately 43.33% of the AIME 2024 problems on its first attempt. The AIME (American Invitational Mathematics Examination) is a challenging math competition, and achieving a pass@1 accuracy of 43.33% indicates a significant level of proficiency in solving complex mathematical problems without multiple attempts.

Overall comparison between Tina and baseline models

What Makes Tina Different

Tina's secret sauce is hidden in its minimalist stack:

- Tiny Backbone: A distilled 1.5B parameter Qwen model.

- LoRA for RL: Instead of updating all parameters, Tina updates <1% of them using LoRA, making training drastically cheaper.

- No Hyperparameter Sweeps: Just fixed configs reused across datasets.

- Hardware? Two L40S GPUs. That’s it.

It’s not just the cost that’s cut. Training time is too. Most Tina models trained to their best checkpoint within 20–50% of one epoch. The team also benchmarked Tina across six reasoning-heavy datasets (AIME, AMC, MATH500, GPQA, Minerva), consistently outperforming full-model baselines.

Training was done using OpenR1, an open implementation of DeepSeekR1. Parameter selection was done using OpenRS and OpenR1. Training was done by co-locating the RL training process and the vLLM on the same two GPUs by constraining vLLM’s GPU memory usage.

Approximate training FLOPs vs. reasoning performance comparison between Tina and baseline models.

What’s Happening Under the Hood?

The team hypothesizes that LoRA’s effectiveness here comes from format adaptation. LoRA doesn't overwrite the model’s core knowledge. It tweaks how that knowledge is presented.

This is evident in the training dynamics. Tina shows a clear “phase transition” in training, where completion length dips, format reward spikes, and accuracy starts to climb. This phase is when the model locks into the “reasoning style” being rewarded by RL.

In short, Tina learns how to think in steps.

Tina vs the Field

On average, Tina matched or beat baseline models trained with full-parameter RL.

Notable results:

The results from Tina-Open-RS2 came from a dataset of only ~7k examples.

Tina Is for Everyone

Tina is more than a model. It’s a proof of concept that efficient RL reasoning is democratizable. The total cost of reproducing all the experiments in the paper is just $526. The cost for reproducing the best checkpoint is just $9.

Every code snippet, dataset split, and checkpoint is open-source. Tina is an open invitation to researchers and builders alike: you don’t need to be OpenAI to train reasoning models.

What May Be Next?

Tina is just the beginning. I anticipate more work exploring various topics.

- Porting the Tina approach to domains like coding and scientific QA.

- Fine-grained reward engineering using LoRA.

- Multi-agent reasoning scaffolds on small models.