LLM Benchmarks Decoded: What Leaderboards Tell Us About AI

From ARC to ArenaHard: What Today’s AI Benchmarks Reveal—and What They Don’t

In the world of large language models (LLMs), benchmarks have become the industry’s truth serum.

Everyone has a model. Everyone has a better model. Everyone posts their shiny leaderboard numbers on social media. But what do these numbers mean? Why do some models that ace academic benchmarks still underperform in the real world? And why are some benchmarks now considered insufficient, or even misleading?

Let’s unpack what these benchmarks evaluate, why they matter, and how they fit into the broader effort to develop truly helpful, reasoning-capable AI.

LLM Benchmarks

A few years ago, benchmarks were largely the domain of academic researchers. Think of them like an AI rite of passage: if you wanted your model to be taken seriously, it had to compete.

Today? Benchmarks have moved beyond academic curiosity. They are commercial, cultural, and competitive tools. VCs cite them. Enterprise buyers scrutinize them. Founders brag about them.

But not all benchmarks are created equal. Some test narrow capabilities like pronoun resolution. Others require reasoning across multiple modalities. Some are static, others dynamic. And many have quirks that can trick both developers and customers into thinking a model is better, or worse, than it truly is.

To understand what benchmarks tell us, let’s walk through the major ones that shape today’s LLM landscape.

Benchmark Categories: Made With GPT-1

Core Knowledge & Reasoning Benchmarks

These benchmarks are the bread and butter for any serious LLM. They tell us: Does the model understand the world, and can it reason about it?

AI2 Reasoning Challenge (ARC)

What it is: Grade-school science questions designed to require reasoning beyond simple recall.

Tests: Commonsense and multi-step reasoning.

Why it matters: Early language models could memorize facts. ARC forces them to think.

The AI2 Reasoning Challenge (ARC) is a benchmark designed to test the advanced reasoning abilities of AI models, not just their ability to recall facts:

- It was created by the Allen Institute for Artificial Intelligence (AI2).

- It focuses on grade-school science questions, but importantly, the questions are selected to be non-trivial. That is, a model can't simply memorize facts to pass.

- Many questions require multi-step reasoning, commonsense knowledge, or implicit background understanding.

The ARC dataset contains 7,787 multiple-choice science questions drawn from publicly available grade-school science exams, mostly at the U.S. 3rd to 9th grade level. It’s divided into two subsets: an Easy Set and a Challenge Set. The Challenge Set holds the questions that simple retrieval and word co-occurrence methods answered incorrectly.

Many top models, such as GPT-4 and DeepSeek R1, cite their ARC scores to prove improvements in real-world reasoning, not just text generation.

HellaSwag

What it is: Sentence completion tasks grounded in commonsense reasoning.

Tests: Natural language inference and commonsense reasoning.

Why it matters: It’s easy to finish a sentence. It’s challenging to finish it in a way that makes sense to humans.

HellaSwag is a commonsense reasoning benchmark designed to be challenging for AI models, especially older ones that relied heavily on surface-level patterns rather than true reasoning.

It tests a model’s ability to choose the most plausible next sentence given a short context.

HellaSwag isn’t about completing a sentence grammatically. It’s about completing it logically and meaningfully, just like a human would.

The HellaSwag dataset was built by applying adversarial filtering to contexts drawn from two sources: video captions (ActivityNet) and how-to articles (WikiHow).

Key points about the dataset:

- It contains about 60K rows.

- Each question has one context and four choices (one correct, three highly deceptive wrong ones).

- The wrong answers were generated automatically, then filtered using models like BERT to select the "hardest" wrong ones.

Top models like GPT-4 use this benchmark:

MMLU (Massive Multitask Language Understanding)

What it is: 57 subject areas ranging from STEM to humanities.

Tests: Broad knowledge and reasoning.

Why it matters: MMLU became a default benchmark because it simulates the diversity of real-world knowledge work.

MMLU is one of the most important and widely cited benchmarks for evaluating large language models (LLMs) across a very broad range of subjects.

- It tests a model's ability to answer multiple-choice questions covering 57 diverse fields.

- These fields include STEM, social sciences, and the humanities, plus professional-level subjects like medicine and law.

MMLU is a specially curated benchmark made up of 15,908 multiple-choice questions.

- It pulls from a wide range of real academic and professional exam questions, educational resources, certification materials, and textbooks.

- It covers 4 levels of difficulty:

- Elementary school

- High school

- College

- Professional/expert
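Scoring a multiple-choice benchmark of this kind usually comes down to plain accuracy, often reported per subject as well as overall. Below is a minimal sketch of that bookkeeping; the record layout, the subject names, and the function name `accuracy_by_subject` are illustrative assumptions, not the official evaluation harness.

```python
from collections import defaultdict


def accuracy_by_subject(records):
    """Compute per-subject and overall accuracy.

    records: iterable of (subject, predicted_letter, gold_letter) tuples.
    This layout is an assumption made for illustration.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, gold in records:
        total[subject] += 1
        # Compare answer letters case-insensitively, ignoring whitespace.
        if predicted.strip().upper() == gold.strip().upper():
            correct[subject] += 1
    per_subject = {s: correct[s] / total[s] for s in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_subject, overall


# Hypothetical predictions for four questions in two subjects.
records = [
    ("college_physics", "B", "B"),
    ("college_physics", "a", "C"),
    ("professional_law", "D", "D"),
    ("professional_law", "C", "C"),
]
per_subject, overall = accuracy_by_subject(records)
print(per_subject, overall)
```

Reporting the per-subject breakdown next to the overall number makes it easier to see when a strong headline score hides a weak domain.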

Top models like Qwen and Llama include this benchmark in their releases:

TruthfulQA

What it is: Questions designed to trick models into repeating falsehoods.

Tests: Factuality and resistance to misinformation.

Why it matters: It reveals whether a model simply reflects internet data or can resist it.

TruthfulQA is a benchmark specifically designed to test how truthful and factual a language model is, especially when faced with questions that might tempt it to hallucinate or repeat common misconceptions.

- The questions are intentionally tricky: they often resemble real-world myths, conspiracies, or misleading ideas found on the internet.

- The goal isn’t just to answer correctly; it’s to resist answering incorrectly in plausible but wrong ways.

Below, you can see how different models perform on this benchmark as provided by the GPT-4 release blog:

Winogrande

What it is: Pronoun resolution requiring commonsense reasoning.

Tests: Coreference resolution.

Why it matters: Even powerful models can stumble on deceptively simple tasks like deciding who "he" refers to.

Winogrande is a commonsense reasoning benchmark that focuses specifically on coreference resolution: figuring out what or who a pronoun like “he,” “she,” “it,” or “they” refers to in a sentence.

- It’s based on the famous Winograd Schema Challenge, which was proposed as an alternative to the Turing Test: if an AI could solve it consistently, it would be demonstrating genuine commonsense reasoning.

- Winogrande scales up that challenge massively. It’s much bigger, noisier, and deliberately tougher to prevent models from using shallow tricks.

Winogrande tests whether a model can understand context and use common sense to resolve ambiguities.

The Winogrande dataset:

Each question gives a sentence with a blank ("___") and two answer choices.

The model must pick the word (person/object) that makes the sentence correct and natural.

The figure below shows GPT-4’s score on this benchmark.

Math & Logic Benchmarks

Models that can write poems are nice. Models that can reason mathematically? That's a whole new level.

GSM8k

What it is: Grade-school math word problems.

Tests: Arithmetic and multi-step reasoning.

Why it matters: A strong GSM8k score is a leading indicator for mathematical reasoning and stepwise problem solving.

GSM8k stands for Grade School Math 8k. It’s a benchmark designed to test an LLM’s ability to solve basic arithmetic and multi-step math word problems, the kind you'd find in a grade-school curriculum.

- It’s not simple trivia (like "what’s 2+2").

- Each question typically requires multiple steps of reasoning, careful reading, and accurate arithmetic.

GSM8k tests whether a model can think through small, logical steps, not just memorize math facts.
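Grading a GSM8k answer is typically an exact match on the final number. The sketch below relies on one fact about the dataset, that reference solutions end with a line of the form `#### <answer>`, and on one assumption of ours: that the last number in the model's output is its final answer. The function names are hypothetical.

```python
import re

# Matches integers and decimals, allowing thousands separators like 1,000.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def gold_answer(solution: str) -> str:
    # GSM8k reference solutions end with a line like "#### 18".
    return solution.split("####")[-1].strip().replace(",", "")


def predicted_answer(model_output: str):
    # Assumption: the last number mentioned is the model's final answer.
    matches = NUMBER.findall(model_output)
    return matches[-1].replace(",", "") if matches else None


def is_correct(model_output: str, solution: str) -> bool:
    predicted = predicted_answer(model_output)
    if predicted is None:
        return False
    return float(predicted) == float(gold_answer(solution))


solution = "She sells 16 - 3 - 4 = 9 eggs.\nShe makes 9 * 2 = 18 dollars.\n#### 18"
good = "16 - 3 - 4 = 9 eggs, and 9 * 2 = 18 dollars per day."
bad = "She makes 20 dollars."
print(is_correct(good, solution), is_correct(bad, solution))
```

The "last number" heuristic is fragile, which is one reason reported GSM8k scores can shift with the prompt format and the answer parser.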

MATH (Mathematics Aptitude Test of Heuristics) Level 5

What it is: High-school competition math at the hardest level.

Tests: Advanced mathematical reasoning.

Why it matters: Excelling here signals that a model can move beyond memorization to problem-solving creativity.

The MATH benchmark (sometimes just called the "MATH dataset") is a high-school competition-level math benchmark designed to push LLMs much harder than simple arithmetic.

- It consists of real math competition problems, the kind you’d see in contests like the AMC (American Mathematics Competitions), AIME (American Invitational Mathematics Examination), or national/international olympiad qualifiers.

- These problems require multiple steps of abstract reasoning, symbolic manipulation, and problem-solving heuristics, not just number crunching.

MATH is harder than GSM8k, making it a must-have for models that claim true mathematical reasoning ability, not just memorization.

GPQA (Graduate-Level Google-Proof Q&A)

What it is: Tough questions designed to defeat simple search-based answers.

Tests: Complex question answering.

Why it matters: Tests whether models can think, not just retrieve.

GPQA is a high-level question-answering benchmark that challenges models with very difficult, expert-level questions that are not easily answerable by simple internet searches.

The questions are graduate-level, meaning they cover specialized domains in biology, physics, and chemistry, not basic general knowledge.

GPQA Diamond

What it is: An even harder version of GPQA.

Tests: Expert-level reasoning.

Why it matters: For testing frontier models that claim to surpass graduate-level understanding.

GPQA Diamond is an even harder variant of the original GPQA (Graduate-Level Google-Proof Q&A) benchmark.

- It focuses on the most difficult questions from GPQA, the "diamond-hard" tier.

- These questions require not only deep knowledge but also advanced reasoning, multi-hop inference, and sometimes even implicit background understanding that isn't stated outright.

If GPQA tests whether a model can reason like a graduate student, GPQA Diamond tests whether it can reason like a top graduate student or an entry-level researcher.

Here is how some of the top models, such as GPT-4.1, perform on this benchmark:

MathVista

What it is: Math problems that also require visual understanding.

Tests: Math + visual reasoning.

Why it matters: Many real-world math problems (finance, engineering, science) are graphically presented.

MathVista is a visual math reasoning benchmark, meaning it tests a model’s ability to solve math problems that require understanding images (like charts, graphs, or diagrams), not just text.

- It combines mathematical reasoning with visual perception.

- Models must read an image, extract relevant information, and reason mathematically to answer correctly.

- It’s designed for multimodal LLMs that can process both text and images together.

MathVista asks: Can your model see, understand, and reason mathematically, not just read text?

Pass@1 on AIME24

What it is: Accuracy on the 2024 American Invitational Mathematics Examination (AIME).

Tests: Mathematical problem-solving.

Why it matters: It's become a go-to metric for math-heavy models like Qwen-Math and DeepSeek-Math.

Pass@1 on AIME24 is a specialized math benchmark that measures how accurately an LLM can solve difficult competition-level math problems, specifically from the AIME 2024 exam.

Let's break this down:

- AIME stands for the American Invitational Mathematics Examination.

- It's a prestigious U.S. high school math competition, much harder than regular classroom math.

- AIME problems require multi-step problem solving, abstract reasoning, careful planning, and often creative insights.

- Pass@1 means: How often does the model get the correct answer on its first try?

Pass@1 on AIME24 measures first-shot success on some of the hardest math questions a model can face.
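Because a single sampled answer is noisy on a small exam, Pass@1 is often estimated by drawing several samples per problem and averaging their correctness. Here is a minimal sketch of that calculation; the problem IDs, the answers, and the function name `pass_at_1` are made up for illustration and are not taken from the actual exam.

```python
def pass_at_1(samples_by_problem, answers):
    """Average first-try accuracy, estimated from several samples per problem.

    samples_by_problem: {problem_id: [sampled_final_answer, ...]}
    answers:            {problem_id: correct_answer}
    """
    per_problem = []
    for problem_id, samples in samples_by_problem.items():
        hits = sum(1 for s in samples if s == answers[problem_id])
        # Fraction of samples that were right = chance a single try succeeds.
        per_problem.append(hits / len(samples))
    return sum(per_problem) / len(per_problem)


# Hypothetical answers and samples (AIME answers are integers from 0 to 999).
answers = {"p1": 204, "p2": 25}
samples_by_problem = {
    "p1": [204, 204, 113, 204],  # 3 of 4 samples correct
    "p2": [25, 30, 30, 30],      # 1 of 4 samples correct
}
score = pass_at_1(samples_by_problem, answers)
print(score)
```

With so few problems on the exam, each one moves the score by several points, so small differences between models on this metric deserve caution.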

Instruction Following & Multi-Step Reasoning

Reasoning is the new frontier for LLMs. These benchmarks push beyond knowledge to capabilities.

Instruction-Following Evaluation (IFEval)

What it is: Tests adherence to detailed instructions.

Tests: Instruction following.

Why it matters: Enterprise models must follow complex workflows reliably.

IFEval is a benchmark specifically designed to measure how well large language models (LLMs) follow complex, detailed instructions.

- It’s not about knowledge.

- It’s not about common sense.

- The only question is: Can the model comprehend a lengthy, multi-part instruction and perform the task exactly as instructed?

Such clarity is important for real-world deployments, especially in enterprise, agentic AI, and automated workflows, where a small misunderstanding can break an entire chain of tasks.

IFEval tests precision in instruction following, not creativity or reasoning.
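What makes this kind of evaluation practical is that the instructions are verifiable: each can be checked by a small program rather than by a human judge. The sketch below shows the idea with three hypothetical checks (minimum word count, required keyword, exact bullet count); the function names and checks are our own illustrations, not the benchmark's actual checker code.

```python
def word_count_at_least(n):
    # Instruction of the form "answer in at least n words".
    return lambda text: len(text.split()) >= n


def includes_keyword(keyword):
    # Instruction of the form "mention the word <keyword>".
    return lambda text: keyword.lower() in text.lower()


def bullet_count_exactly(n):
    # Instruction of the form "use exactly n bullet points".
    def check(text):
        bullets = [
            line for line in text.splitlines()
            if line.lstrip().startswith(("- ", "* "))
        ]
        return len(bullets) == n
    return check


def evaluate(response, checks):
    results = [check(response) for check in checks]
    return {"per_instruction": results, "all_followed": all(results)}


response = (
    "Benchmarks matter:\n"
    "- They track progress\n"
    "- They guide buyers\n"
    "- They expose gaps"
)
checks = [
    word_count_at_least(10),
    includes_keyword("benchmarks"),
    bullet_count_exactly(2),  # the response has three bullets, so this fails
]
report = evaluate(response, checks)
print(report)
```

Keeping both the per-instruction results and the all-or-nothing flag separates a model that misses one constraint from one that ignores the prompt entirely.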

Multistep Soft Reasoning (MuSR)

What it is: Narrative reasoning requiring multiple inference steps.

Tests: Multi-step reasoning.

Why it matters: Simulates the kinds of multi-step tasks found in real-world agentic workflows.

MuSR is a benchmark designed to test whether language models can reason across multiple steps, but in a soft, narrative, and fuzzy way, rather than in strict, symbolic math.

- "Multistep" means the model has to perform several connected inferences to reach the right answer.

- "Soft" means the reasoning is narrative, contextual, or commonsense-driven, not rigid math or formal logic.

- Typically, MuSR tasks involve story-like scenarios where the model must connect dots that aren’t spelled out explicitly.

MuSR tests if a model can “think through” real-world, common-sense problems by chaining reasoning steps inside a story or situation.

Big Bench Hard (BBH)

What it is: A curated subset of especially difficult BIG-Bench tasks.

Tests: Multi-domain reasoning.

Why it matters: Serves as a stress test for general reasoning across tasks.

Big Bench Hard (BBH) is a curated benchmark made up of the most challenging tasks from the massive BIG-bench project.

- BIG-bench (Beyond the Imitation Game Benchmark) was a huge community effort: over 200 tasks were created by researchers across AI, cognitive science, linguistics, and philosophy.

- BBH selects only the hardest subset of those tasks, the ones that:

- Humans perform very well on (80–90%+),

- Models historically perform poorly (well below human levels).

BBH focuses on tasks where reasoning, creativity, and complex thinking are necessary; shallow memorization or surface tricks do not work.

Big Bench Hard is a multi-domain, adversarial stress test for serious reasoning ability across wildly diverse problem types.

MMLU-Pro

What it is: A harder variant of MMLU with an emphasis on reasoning.

Tests: Professional-level knowledge and reasoning.

Why it matters: Distinguishes casual models from ones ready for specialized professional use.

MMLU-Pro (Massive Multitask Language Understanding - Pro) is an advanced, harder version of MMLU.

- It’s designed to raise the bar beyond the original MMLU.

- While MMLU focuses on broad academic and professional knowledge across 57 subjects, MMLU-Pro:

- Increases the difficulty of questions,

- Shifts toward reasoning-heavy questions, rather than simple fact recall.

- Reduces surface cue answerability, meaning models can't rely on recognizing shallow keyword matches.

MMLU-Pro tests whether a model can combine deep domain knowledge with real logical reasoning, not just retrieve memorized information.

The figure below from the official release blog of Qwen 3 shows the performance of various models on the MMLU-Pro benchmark and other benchmarks.

MTOB

What it is: Machine Translation from One Book, a translation task learned from a single grammar book.

Tests: Long-context understanding and learning a new skill in context.

Why it matters: Crucial for evaluating models that must work from reference material supplied in the prompt, as research assistants, legal analysis tools, and technical support systems do.

MTOB stands for Machine Translation from One Book. It’s a benchmark designed to test a model’s ability to:

- Translate between English and Kalamang, a language with very little text online,

- Learn that language from a grammar book and word list supplied in the prompt, rather than from training data,

- Make effective use of a very long context.

MTOB measures whether a model can pick up a new task from documentation placed in its context window, not just recall what it saw during training.

Visual & Multimodal Reasoning

Multimodal models are the next big leap. But evaluation? That’s still catching up.

ChartQA

What it is: Question answering over charts.

Tests: Data interpretation and visual reasoning.

Why it matters: Many enterprise workflows involve interpreting charts and graphs.

ChartQA is a visual question-answering benchmark focused specifically on interpreting charts, graphs, and tables.

- It tests if a model can look at a chart (a bar graph, pie chart, or table) and reason about the data to answer a question.

- Unlike MathVista (which mixes math and visual reasoning), ChartQA is purely about data understanding and interpretation.

ChartQA measures whether a model can read, understand, and reason based on structured visual data, not just text.

DOCVQA

What it is: Visual question answering over document images.

Tests: Document understanding.

Why it matters: Vital for industries like law, finance, and healthcare, where document comprehension is key.

DOCVQA stands for Document Visual Question Answering. It tests a model’s ability to read, understand, and answer questions about scanned documents, like forms, invoices, contracts, scientific papers, receipts, manuals, and more.

The questions require models to extract information from structured layouts (tables, headers, footnotes, small fonts), not just read continuous text.

DOCVQA measures if an AI can actually "understand" messy real-world documents the way humans do, combining vision, reading, and reasoning.

MutiLF

What it is: Multi-turn, multi-modal information fusion tasks.

Tests: Multimodal reasoning.

Why it matters: It tests the cutting edge, where models must reason across text, image, and data inputs.

MutiLF stands for Multi-turn, Multi-modal Information Fusion.

- It's a complex, multi-modal benchmark that evaluates a model’s ability to fuse information across text and visual data over multiple conversational turns.

- In other words, it's not just about reading an image or answering a one-off question; it’s about reasoning through a dialogue while combining different modalities (text, images, tables, graphs).

MutiLF tests whether a model can hold a multi-step conversation that involves pulling together information from multiple types of input, text, vision, charts, tables, and reason about them coherently.

DROP (Discrete Reasoning Over Paragraphs)

The DROP benchmark is a reading comprehension dataset specifically designed to test a model's discrete reasoning skills, multi-step inference, and ability to manipulate numbers, not just surface-level text matching.

It was created by researchers from:

- UC Irvine

- Peking University

- Allen Institute for AI

- University of Washington

DROP forces the model to reason over information in the passage, unlike traditional QA (Question Answering) datasets like SQuAD, where simple span extraction from text is often enough.

In other words, the model must understand the paragraph and reason over it, not just find matching words.

Coding & Problem-Solving

Coding benchmarks have become essential for measuring reasoning and utility in programming.

Live Code Bench

What it is: A continuously updated set of coding problems collected from competitive programming sites.

Tests: Code generation, self-repair, and reasoning about code execution.

Why it matters: Passes the "Can it code?" sniff test on problems the model is unlikely to have memorized.

Live Code Bench (usually written LiveCodeBench) is a benchmark specifically designed to evaluate how well large language models (LLMs) write and reason about code without the results being inflated by memorized problems. The "live" in the name refers to the problem set, which keeps growing as new problems are published on LeetCode, AtCoder, and Codeforces. Because each problem is dated, a model can be scored only on problems released after its training cutoff.

It tests more than single-shot code generation. The scenarios include:

- Code generation: write a program from a problem statement

- Self-repair: fix a failed attempt using the error feedback

- Code execution: predict what a given program outputs

- Test output prediction: predict the expected output for a given input

The self-repair scenario mirrors the write, run, debug, and refine loop a human developer follows, which makes the benchmark relevant to agentic coding assistants that aren’t expected to write perfect code on the first try.

Older static benchmarks take a different approach:

- HumanEval (created by OpenAI)

- MBPP (Mostly Basic Python Problems)

Their problems have been public for years, so a high score can partly reflect exposure during training. Filtering Live Code Bench by release date is meant to limit that effect.

CodeForces

What it is: Algorithmic problem-solving from competitive programming.

Tests: Coding and algorithmic reasoning.

Why it matters: Reflects real-world coding complexity beyond simple "autocomplete."

The Codeforces LLM Benchmark (CF-LLM) is a coding benchmark designed to evaluate how well large language models (LLMs) can solve real-world competitive programming problems.

It was created by DeepMind and collaborators in 2024 to address a key gap:

- Most older coding benchmarks (like HumanEval and MBPP) focus on small, simple problems.

- Codeforces problems are much more realistic, complex, longer, and require deeper reasoning.

So, CF-LLM tests if LLMs can compete with human coders on hard, contest-level problems.

The benchmark pulls problems from Codeforces, one of the world’s largest competitive programming platforms.

Dataset details:

- Problems from Codeforces.

- Problems are selected across a wide range of difficulty ratings:

- From beginner problems up to expert/master level.

- Each problem comes with:

- The problem statement.

- Hidden test cases (to prevent overfitting during training).

- Public test cases for basic validation.

CF-LLM measures:

- Pass@k accuracy: Can the model generate a correct solution within k attempts?

- Difficulty-aware scoring: Harder problems count more in the final score.

- Comparison to human performance: Models are compared to actual contestant performance on the same problems.

AlphaCode 2 was evaluated on a curated set of Codeforces contests and was estimated to perform at roughly the 85th percentile of competitors, that is, in about the top 15%, a significant improvement over the original AlphaCode model.

Aider@Pass1

What it is: Pass@1 code generation accuracy.

Tests: Code correctness on first try.

Why it matters: Simpler than Live Code Bench, but a good leading indicator for model coding reliability.

Aider@Pass1 is a large language model (LLM) benchmark designed to evaluate coding abilities, specifically how often an LLM can solve programming problems correctly on the first try.

- "Pass@1" means what percentage of problems the model solves correctly on its first attempt.

- "Aider" is a coding assistant that helps programmers write, edit, and fix code using natural language commands.

As a result, Aider@Pass1 assesses an LLM's capacity to function as a real coding assistant, not just to "generate code," but to complete realistic programming tasks in one go without the need for retries or significant corrections.

The Aider@Pass1 benchmark dataset comes from:

- Real-world GitHub issues and pull requests

- Typical coding assistant tasks, like:

- Add a feature to the existing code

- Fix a bug

- Refactor code for performance or readability

- Update code to new library/API versions

- Sourced problems across multiple programming languages: Python, JavaScript, Go, etc.

HumanEval

HumanEval is one of the most important and widely used LLM benchmarks for evaluating code generation.

It was originally introduced by OpenAI (in the Codex paper, 2021) to test whether a model can:

- Write correct, executable code

- Given a natural language description of a programming problem

You give the model a function signature and a text description of what the function should do. The model must then write the correct function, no hints, no multiple tries, just one clean solution.

The HumanEval dataset is a collection of:

- 164 Python programming problems

- Each problem includes:

- A function signature

- A natural language prompt

- Hidden unit tests

The problems cover a range of difficulty levels, from very easy to moderately challenging, and test skills like:

- Basic math

- String manipulation

- List operations

- Recursion

- Simple algorithms (sorting, searching)

The main metric is Pass@k:

- Pass@1: Did the model generate a correct solution on its first try?

- Pass@5: If the model generates 5 different completions, does at least one pass all test cases?
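In practice, Pass@k is usually estimated rather than measured directly: generate n samples per problem, count the c that pass every test, and compute the probability that a random draw of k samples contains at least one passing solution, which is 1 - C(n-c, k) / C(n, k). A minimal sketch follows; the function name and the example counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: samples generated, c: samples that passed all tests, k: budget.
    Returns the probability that at least one of k samples, drawn
    without replacement from the n, is correct.
    """
    if n - c < k:
        # Fewer than k failing samples: every draw of k includes a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical problem: 10 samples generated, 3 of them pass.
p1 = pass_at_k(n=10, c=3, k=1)
p5 = pass_at_k(n=10, c=3, k=5)
print(p1, p5)
```

The benchmark score is then the mean of this value over all problems. Note how the same model looks far stronger at k=5 than at k=1, so the k behind a reported number always matters.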

HumanEval is like a "coding interview" test for AI models, but judged automatically by running the code!

Meta & Composite Evaluations

Some benchmarks don’t test a skill; they test how well models compare overall.

ELO on lmarena.ai

What it is: A dynamic pairwise rating system.

Tests: Overall comparative performance.

Why it matters: Offers a constantly updated view of model rankings in real-world scenarios.

Lmarena.ai is a new LLM benchmark platform that ranks large language models (LLMs) by head-to-head battles, not static benchmarks like MMLU, GSM8K, etc.

At the center of Lmarena is the ELO system, the same idea used in chess rankings:

- Each LLM starts with an initial ELO score.

- Two LLMs are given the same task.

- Human or automated judging (sometimes judged by another strong model) picks the better answer.

- The winner gains ELO points; the loser loses points.
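The rating update in the steps above can be sketched with the classic chess formula. The starting rating of 1000 and the K-factor of 32 are assumed values for illustration, and the platform's actual rating computation may differ from this textbook version.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    # Probability that A beats B under the standard logistic model.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a, rating_b, score_a, k=32):
    """Return the new (rating_a, rating_b) after one battle.

    score_a is 1.0 if A wins, 0.0 if B wins, 0.5 for a tie.
    k controls how far one result moves the ratings (assumed value).
    """
    expected_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - expected_a))
    return new_a, new_b


# Two models start level; model A wins the first battle.
a, b = elo_update(1000, 1000, score_a=1.0)
print(a, b)

# An upset moves ratings more than an expected result does.
upset_gain = elo_update(1000, 1400, score_a=1.0)[0] - 1000
expected_gain = elo_update(1400, 1000, score_a=1.0)[0] - 1400
print(upset_gain, expected_gain)
```

The update is zero-sum: whatever the winner gains, the loser gives up, and beating a much higher-rated model is rewarded far more than beating a weaker one.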

ArenaHard

What it is: Especially difficult evaluation tasks.

Tests: Model robustness.

Why it matters: For testing models at their limits, where subtle reasoning or hallucination control is required.

ArenaHard is a benchmark designed to test the hardest cases where language models struggle, even when they are already very strong.

It was created as a harder version of the LMSYS Chatbot Arena battles (which use ELO to rank LLMs like GPT-4, Claude, Gemini, etc.).

- ArenaHard selects only the most difficult prompts, specifically, the ones that even top models fail.

- Instead of using all prompts like the normal Chatbot Arena, ArenaHard focuses on the "hard subset".

- This forces models to be judged based on their ability to handle tough problems like:

- Deep logical reasoning

- Multi-step problem solving

- Long-form coherent writing

- Difficult coding challenges

- Subtle factual accuracy and hallucination resistance

ArenaHard is not about "being good" in general; it’s about being good when it’s hardest.

Other benchmarks worth noting are:

- AMC23, which includes 40 problems from the 2023 American Mathematics Competitions.

- Minerva, which includes 272 quantitative reasoning problems at the undergraduate level.

- MT-Bench, a benchmark designed to evaluate how well large language models (LLMs) perform in multi-turn conversations. It tests a model’s ability to handle dialogue coherence, instruction following, reasoning, and helpfulness over several back-and-forth turns, not just single responses. MT-Bench uses a mix of human-written prompts and GPT-4 judged responses to score models across realistic, conversational tasks.

Why Benchmarks Aren’t Enough

Benchmarks are essential. But they are not sufficient.

Here’s why:

- Static vs. dynamic performance: Benchmarks test frozen capabilities. In production, models must adapt, especially in agentic or retrieval-augmented (RAG) scenarios.

- Length bias and evaluation bias: Human and LLM judges often prefer longer responses even when they’re worse. Models can game this.

- Prompt dependence: Benchmark prompts may not reflect production use cases. In the wild, instructions can be ambiguous or contradictory.

- Overfitting risks: Some models are tuned specifically to excel at benchmark tasks (leaderboard chasing) rather than generalize to new tasks.

- Lack of preference alignment: Benchmarks test performance on paper. They don’t test whether the model’s outputs align with the end-user’s preferences, goals, or values.

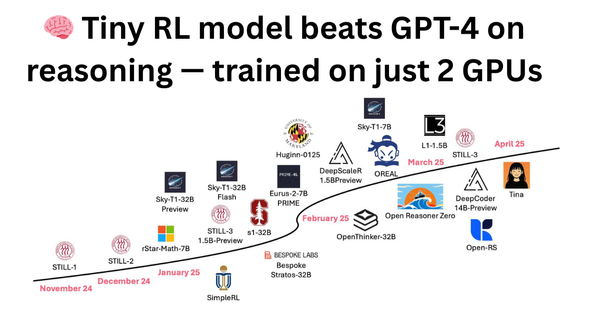

The Rise of Preference Optimization

The new frontier isn’t just beating benchmarks; it’s tuning models to align with user preferences and dynamic use cases.

Techniques like:

- Reinforcement Learning from Human Feedback (RLHF)

- Reinforcement Learning from AI Feedback (RLAIF)

- Direct Preference Optimization (DPO)

- Proximal Policy Optimization (PPO)

- Group Relative Policy Optimization (GRPO)

…are now standard for top-performing models. They allow models not just to score well on benchmarks but also to behave well in production.

In fact, in head-to-head comparisons, models fine-tuned with reinforcement learning often outperform those that excel at static benchmarks but lack preference alignment.
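To make one of these techniques concrete, here is a minimal sketch of the DPO objective for a single preference pair. It rewards the model for raising the likelihood of the preferred response, relative to a frozen reference model, more than it raises the likelihood of the rejected one. The log-probabilities below are made-up numbers and `beta=0.1` is an assumed setting; real training computes these values from model outputs over batches.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response
    under the policy being trained or under the frozen reference model.
    """
    # How much more the policy favors each response than the reference does.
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)), written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# Policy identical to the reference: no preference learned yet.
neutral = dpo_loss(-2.0, -2.0, -2.0, -2.0)
# Policy favors the chosen response: lower loss.
aligned = dpo_loss(-1.0, -5.0, -2.0, -2.0)
# Policy favors the rejected response: higher loss.
misaligned = dpo_loss(-5.0, -1.0, -2.0, -2.0)
print(neutral, aligned, misaligned)
```

Unlike PPO-based RLHF, this objective needs no separate reward model or sampling loop, which is a large part of its appeal.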

Enterprise Implications

For businesses deploying LLMs, understanding benchmark performance is only the first step.

To succeed in the real world, enterprises must:

- Evaluate beyond benchmarks. Consider user satisfaction, latency, cost per query, and robustness.

- Fine-tune for preferences. Use RLHF, RLAIF, or similar methods to align models to business needs.

- Monitor dynamically. Track model drift, performance under changing prompts, and alignment to KPIs.

- Beware of overfitting. Prioritize generalization, not just leaderboard rankings.

Final Thoughts

Benchmarks have been indispensable for tracking the rapid evolution of LLMs. They’ve helped us quantify progress and push boundaries.

However, they serve as the beginning rather than the end.

As reasoning becomes the new competitive frontier and as enterprises demand not just accuracy but helpfulness and safety in complex, multi-modal, multi-turn scenarios, a model’s ability to perform on benchmarks will matter less than its ability to learn, adapt, and align over time.

That’s why the broader GenAI industry is increasingly focused not just on winning the next leaderboard, but on building models that continuously improve, understand preferences, and excel in the unpredictable, high-stakes real world.

Because at the end of the day, your customers don’t care about MMLU. They care about whether the model works for their use case.